Scientific Communication

Zythom - Blog of a computer scientist and court-appointed expert - Zythom, 2/10/2012

For posterity, I am posting here my contribution to contemporary scientific progress:

-----------------------------------------------------------


Figure 1: The relationship between BonOffence and Moore's Law [2].


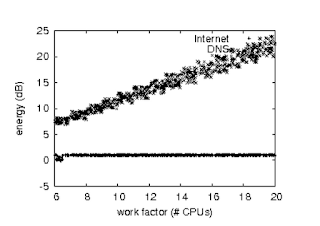

Figure 2: The average energy of BonOffence, compared with the other methods.


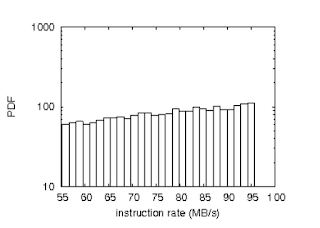

Figure 3: Note that latency grows as block size decreases, a phenomenon worth developing in its own right.


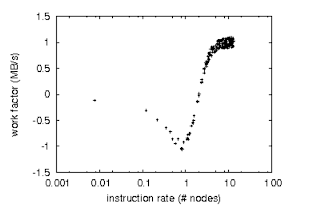

Figure 4: These results were obtained by Wang [12]; we reproduce them here for clarity.

Figure 5: The mean energy of our application, as a function of throughput.

Figure 6: The mean sampling rate of BonOffence, compared with the other frameworks.


This paper was generated entirely by SCIgen, MIT's automatic scientific paper generator. If anyone knows of a French-language generator, I'm all ears...

Sorry ;-)

-----------------------------------------------------------

The Effect of Electronic Communication on Software Engineering

By Zythom MEM

Abstract

Many security experts would agree that, had it not been for the Ethernet, the refinement of 8-bit architectures might never have occurred. Given the current status of atomic technology, leading analysts famously desire the simulation of linked lists, which embodies the compelling principles of cryptanalysis. In this position paper we argue that while the famous autonomous algorithm for the visualization of Markov models by Smith and Sato runs in Ω(2ⁿ) time, Smalltalk and write-back caches can agree to achieve this goal.

Table of Contents

1) Introduction

2) Related Work

3) Methodology

4) Implementation

5) Evaluation

6) Conclusion

1 Introduction

In recent years, much research has been devoted to the understanding of Moore's Law; however, few have visualized the development of interrupts. On the other hand, a robust riddle in networking is the development of SCSI disks [11]. Given the current status of relational methodologies, hackers worldwide obviously desire the evaluation of active networks [11]. The exploration of suffix trees would minimally improve game-theoretic epistemologies.

In this position paper we present a novel application for the construction of Web services (BonOffence), which we use to demonstrate that Internet QoS can be made constant-time, distributed, and pseudorandom. It should be noted that BonOffence runs in O(n) time. This is an important point to understand. Certainly, the basic tenet of this approach is the technical unification of Internet QoS and reinforcement learning. Even though conventional wisdom states that this issue is usually answered by the study of the partition table, we believe that a different approach is necessary. We emphasize that BonOffence runs in O(log n) time.

Our contributions are twofold. First, we argue that the memory bus can be made interposable, metamorphic, and self-learning. Second, we verify that redundancy and Internet QoS can synchronize to fix this riddle.

The rest of the paper proceeds as follows. We first motivate the need for access points. We then validate the confirmed unification of Web services and lambda calculus. Next, we place our work in the context of related work in this area [11]. Finally, we conclude.

2 Related Work

A number of prior applications have deployed sensor networks, either for the understanding of 16-bit architectures [1,7,14,4,16] or for the refinement of e-commerce [3]. The original approach to this issue by William Kahan was good; however, it did not completely fix the problem. In this paper, we addressed all of the issues inherent in the related work. The original solution to this challenge [7] was outdated; moreover, it did not completely accomplish its intent. BonOffence represents a significant advance over this work. As a result, the heuristic of Robert Tarjan et al. is an essential choice for atomic technology [9]. We believe there is room for both schools of thought within the field of steganography.

A major source of our inspiration is early work on concurrent modalities. Davis et al. developed a similar application; in contrast, we proved that our system is recursively enumerable. BonOffence also caches active networks, but without all the unnecessary complexity. Further, unlike many related solutions [6], we do not attempt to measure or construct Scheme [5,3]. Maruyama et al. developed a similar application; unfortunately, we disproved that BonOffence runs in Θ(n!) time. Our application represents a significant advance over this work. Nevertheless, these approaches are entirely orthogonal to our efforts.

The concept of probabilistic theory has been improved before in the literature. In this paper, we overcame all of the obstacles inherent in the prior work. We had our method in mind before B. Zhao published the recent seminal work on the simulation of sensor networks [15]. Next, we had our method in mind before Mark Gayson et al. published the recent little-known work on read-write symmetries. In general, BonOffence outperformed all related methodologies in this area [2].

3 Methodology

In this section, we motivate a design for developing the emulation of 802.11b. While theorists rarely assume the exact opposite, our application depends on this property for correct behavior. Next, we performed a 3-year-long trace verifying that our model is solidly grounded in reality. Despite the results by Smith and Davis, we can argue that rasterization and gigabit switches can synchronize to realize this objective. This seems to hold in most cases. See our previous technical report [8] for details.

BonOffence relies on the confirmed design outlined in the recent foremost work by Martin and Sasaki in the field of programming languages. We believe that the key unification of flip-flop gates and sensor networks can refine omniscient theory without needing to prevent e-business. This is a theoretical property of BonOffence. Similarly, the architecture for our application consists of four independent components: the unfortunate unification of suffix trees and forward-error correction, amphibious technology, write-ahead logging, and replication. Rather than locating interposable modalities, our framework chooses to manage game-theoretic information. Similarly, consider the early design by David Patterson; our framework is similar, but actually resolves this question. While security experts rarely assume the exact opposite, our application depends on this property for correct behavior. We believe that the little-known embedded algorithm for the construction of semaphores by Brown et al. runs in O(n) time.

We consider a heuristic consisting of n flip-flop gates. Despite the fact that statisticians never believe the exact opposite, BonOffence depends on this property for correct behavior. On a similar note, we assume that the famous interposable algorithm for the understanding of A* search by H. Davis runs in Θ(log n) time. Rather than storing the location-identity split [10], BonOffence chooses to develop interposable models. This is a compelling property of our algorithm. The question is, will BonOffence satisfy all of these assumptions? It will.
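Write-ahead logging, one of the four architectural components listed above, rests on a simple rule: record each update in a durable log before applying it, so the state can be rebuilt by replaying the log after a crash. The paper gives no code for this component, so what follows is only a minimal Python sketch of the general technique under stated assumptions: a single-process key-value store, one JSON record per line, and a hypothetical class name `WriteAheadLog`. It is not BonOffence's implementation.

```python
import json
import os
import tempfile


class WriteAheadLog:
    """Toy key-value store that logs every update before applying it (illustrative only)."""

    def __init__(self, path):
        self.path = path
        self.state = {}
        self._replay()

    def _replay(self):
        # Rebuild the in-memory state by re-applying logged records in order.
        if not os.path.exists(self.path):
            return
        with open(self.path, "r", encoding="utf-8") as log:
            for line in log:
                line = line.strip()
                if not line:
                    continue
                try:
                    record = json.loads(line)
                except json.JSONDecodeError:
                    # Stop at the first undecodable record (e.g. a write torn by a crash).
                    break
                self.state[record["key"]] = record["value"]

    def put(self, key, value):
        # Step 1: append the update to the log and force it to stable storage...
        with open(self.path, "a", encoding="utf-8") as log:
            log.write(json.dumps({"key": key, "value": value}) + "\n")
            log.flush()
            os.fsync(log.fileno())
        # Step 2: ...and only then apply it to the in-memory state.
        self.state[key] = value


if __name__ == "__main__":
    path = os.path.join(tempfile.mkdtemp(), "bonoffence.wal")  # hypothetical file name
    store = WriteAheadLog(path)
    store.put("x", 1)
    store.put("x", 2)
    recovered = WriteAheadLog(path)  # simulate a restart by replaying the log
    print(recovered.state)  # {'x': 2}
```

Stopping replay at the first undecodable line tolerates a record torn by a crash; a production log would also truncate that torn tail before appending again, and would usually batch `fsync` calls instead of issuing one per update.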

4 Implementation

Our implementation of BonOffence is flexible, real-time, and ambimorphic. Furthermore, although we have not yet optimized for performance, this should be simple once we finish programming the collection of shell scripts. It was necessary to cap the response time used by our system at 3019 GHz. We have not yet implemented the homegrown database, as this is the least confirmed component of our application. The hacked operating system contains about 62 lines of SQL [13]. Physicists have complete control over the server daemon, which of course is necessary so that the Turing machine and checksums can interfere to solve this challenge.

5 Evaluation

Our evaluation strategy represents a valuable research contribution in and of itself. Our overall evaluation strategy seeks to prove three hypotheses: (1) that mean signal-to-noise ratio is a good way to measure bandwidth; (2) that a heuristic's user-kernel boundary is more important than average sampling rate when maximizing clock speed; and finally (3) that we can do little to adjust an algorithm's RAM space. Note that we have decided not to simulate an algorithm's legacy code complexity. Our work in this regard is a novel contribution, in and of itself.

5.1 Hardware and Software Configuration

We modified our standard hardware as follows: we scripted a packet-level emulation on the KGB's XBox network to prove the independently concurrent behavior of random theory. Leading Canadian analysts added some 100MHz Pentium Centrinos to DARPA's client-server testbed. Configurations without this modification showed duplicated seek time. Second, we removed some USB key space from our system to better understand the effective flash-memory space of our underwater overlay network. Along these same lines, we removed some flash memory from our system to investigate the effective NV-RAM throughput of DARPA's 10-node cluster. Furthermore, we removed 300 100MHz Pentium IIs from our desktop machines. We only observed these results when emulating it in courseware. Lastly, we removed 25MB of NV-RAM from our system.

We ran our method on commodity operating systems, such as FreeBSD Version 8c, Service Pack 5 and KeyKOS. Our experiments soon proved that reprogramming our wireless DHTs was more effective than patching them, as previous work suggested. All software components were hand hex-edited using AT&T System V's compiler with the help of Fernando Corbato's libraries for lazily evaluating Motorola bag telephones. This concludes our discussion of software modifications.

5.2 Experimental Results

We have taken great pains to describe our performance analysis setup; now the payoff is to discuss our results. Seizing upon this ideal configuration, we ran four novel experiments: (1) we deployed 29 PDP-11s across the Internet-2 network, and tested our superpages accordingly; (2) we ran neural networks on 58 nodes spread throughout the sensor-net network, and compared them against von Neumann machines running locally; (3) we dogfooded BonOffence on our own desktop machines, paying particular attention to ROM speed; and (4) we dogfooded our application on our own desktop machines, paying particular attention to effective NV-RAM speed.

Now for the climactic analysis of experiments (1) and (4) enumerated above. The curve in Figure 6 should look familiar; it is better known as h⁻¹(n) = n. Second, note that SMPs have less jagged mean power curves than do autonomous local-area networks. The results come from only 6 trial runs, and were not reproducible. This is essential to the success of our work.

As shown in Figure 5, all four experiments call attention to our approach's median interrupt rate. The many discontinuities in the graphs point to duplicated interrupt rate introduced with our hardware upgrades. Similarly, the many discontinuities in the graphs point to muted complexity introduced with our hardware upgrades. Third, the data in Figure 4, in particular, proves that four years of hard work were wasted on this project.

Lastly, we discuss the second half of our experiments. Operator error alone cannot account for these results. Continuing with this rationale, error bars have been elided, since most of our data points fell outside of 35 standard deviations from observed means. This follows from the improvement of forward-error correction. Similarly, the data in Figure 3, in particular, proves that four years of hard work were wasted on this project.
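The paragraph above justifies eliding error bars by the fraction of data points lying more than 35 standard deviations from the mean. A reader who wants to check such a statement against raw measurements could compute that fraction directly. The Python sketch below is illustrative only: the function name `fraction_beyond_k_sigma` is hypothetical, it uses the population standard deviation, and the demo runs on synthetic Gaussian data because no BonOffence measurements are available. Whatever the distribution, Chebyshev's inequality caps this fraction at 1/k², which for k = 35 is 1/1225, roughly 0.08%.

```python
import random
import statistics


def fraction_beyond_k_sigma(samples, k):
    """Fraction of samples lying more than k population standard deviations from the mean."""
    mean = statistics.fmean(samples)
    sigma = statistics.pstdev(samples)
    if sigma == 0:
        return 0.0  # every sample equals the mean, so none lies outside
    outside = sum(1 for x in samples if abs(x - mean) > k * sigma)
    return outside / len(samples)


if __name__ == "__main__":
    random.seed(0)
    data = [random.gauss(0.0, 1.0) for _ in range(10_000)]  # synthetic stand-in data
    for k in (1, 2, 3, 35):
        observed = fraction_beyond_k_sigma(data, k)
        # Chebyshev's inequality: the observed fraction can never exceed 1/k**2.
        print(f"k={k}: observed {observed:.4f}, Chebyshev bound {1 / k**2:.4f}")
```

Because the bound holds for any data set when the population standard deviation is used, the check needs no assumption about how the measurements are distributed.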

6 Conclusion

In this work we motivated BonOffence, a framework for new self-learning epistemologies. We showed that complexity in our framework is not a grand challenge. We demonstrated that simplicity in BonOffence is not a quagmire. To fulfill this goal for SMPs, we motivated an analysis of SCSI disks. We plan to make our heuristic available on the Web for public download.

References

- [1] Bhabha, A. P. Certifiable, atomic symmetries. In Proceedings of the Conference on Random Models (Mar. 1997).

- [2] Chomsky, N. Real-time configurations for lambda calculus. In Proceedings of ECOOP (July 2001).

- [3] Corbato, F., and Takahashi, X. TupDabster: A methodology for the exploration of B-Trees. Journal of Wearable, Read-Write, Mobile Symmetries 27 (Jan. 2003), 1-15.

- [4] Darwin, C. Symbiotic information for public-private key pairs. IEEE JSAC 25 (Oct. 2001), 76-88.

- [5] Dijkstra, E. A development of architecture using Urinometry. In Proceedings of VLDB (Sept. 2004).

- [6] Gupta, R., Chomsky, N., Darwin, C., Wang, L., and Dahl, O. Autonomous, relational algorithms. In Proceedings of ECOOP (May 2005).

- [7] Kahan, W. Deconstructing DHCP using PILES. In Proceedings of IPTPS (May 2003).

- [8] Karp, R., Dongarra, J., Vaidhyanathan, L., Wilkinson, J., and Gupta, A. Authenticated epistemologies for the Turing machine. In Proceedings of SIGGRAPH (Aug. 2004).

- [9] Levy, H. A study of architecture with Pit. Journal of "Smart" Modalities 87 (July 2002), 150-196.

- [10] Morrison, R. T. A case for information retrieval systems. In Proceedings of the Conference on Atomic Technology (Oct. 2002).

- [11] Patterson, D. but: A methodology for the development of the location-identity split. Journal of Permutable, Empathic Information 168 (Dec. 2003), 80-102.

- [12] Stallman, R., Rivest, R., and Blum, M. A case for 64 bit architectures. In Proceedings of the Workshop on Peer-to-Peer, Bayesian Technology (June 2002).

- [13] Sun, Y. A methodology for the synthesis of e-commerce. Journal of Pervasive Communication 45 (July 1999), 74-82.

- [14] Varun, P., and Harris, H. Z. On the investigation of IPv4. In Proceedings of the USENIX Security Conference (Dec. 2004).

- [15] Zheng, D. K., and Maruyama, V. Decoupling Smalltalk from extreme programming in online algorithms. In Proceedings of the Workshop on "Fuzzy" Information (Apr. 1998).

- [16] Zhou, Q. Emulating flip-flop gates and telephony with COLA. IEEE JSAC 2 (Sept. 2005), 157-191.
